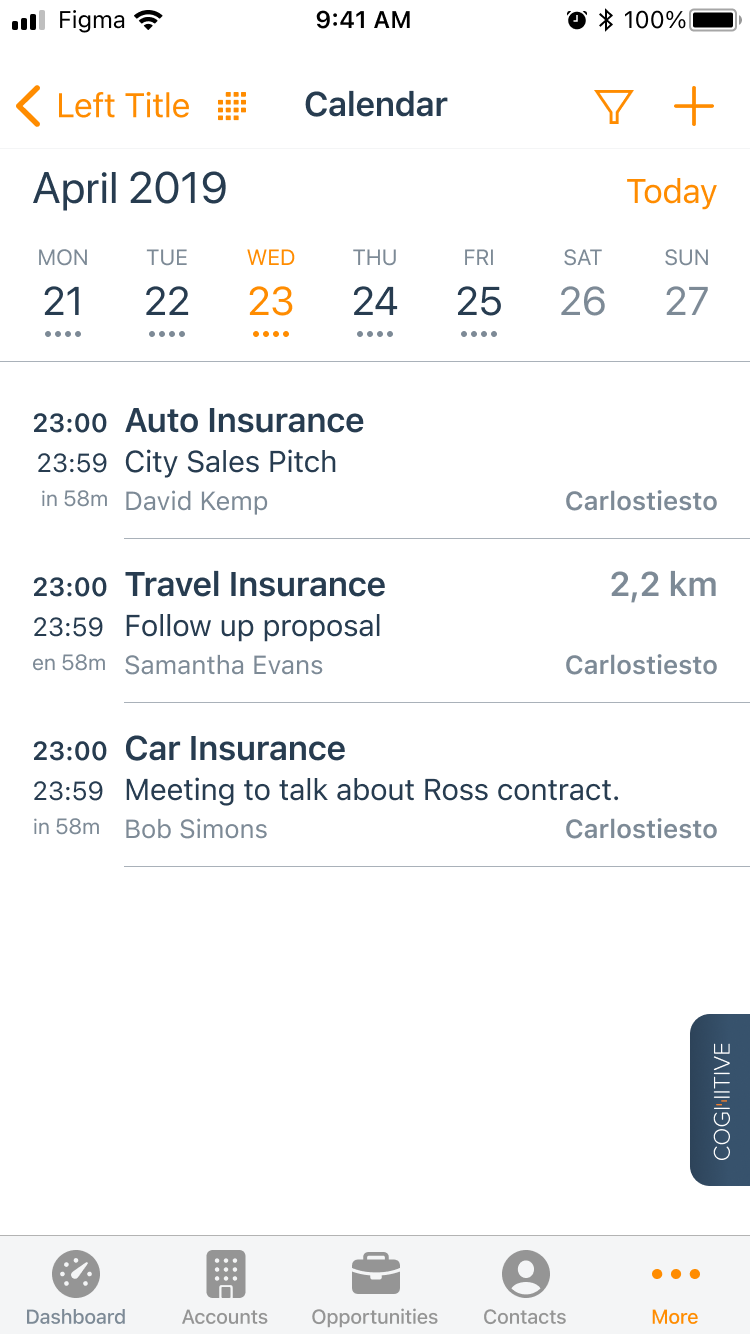

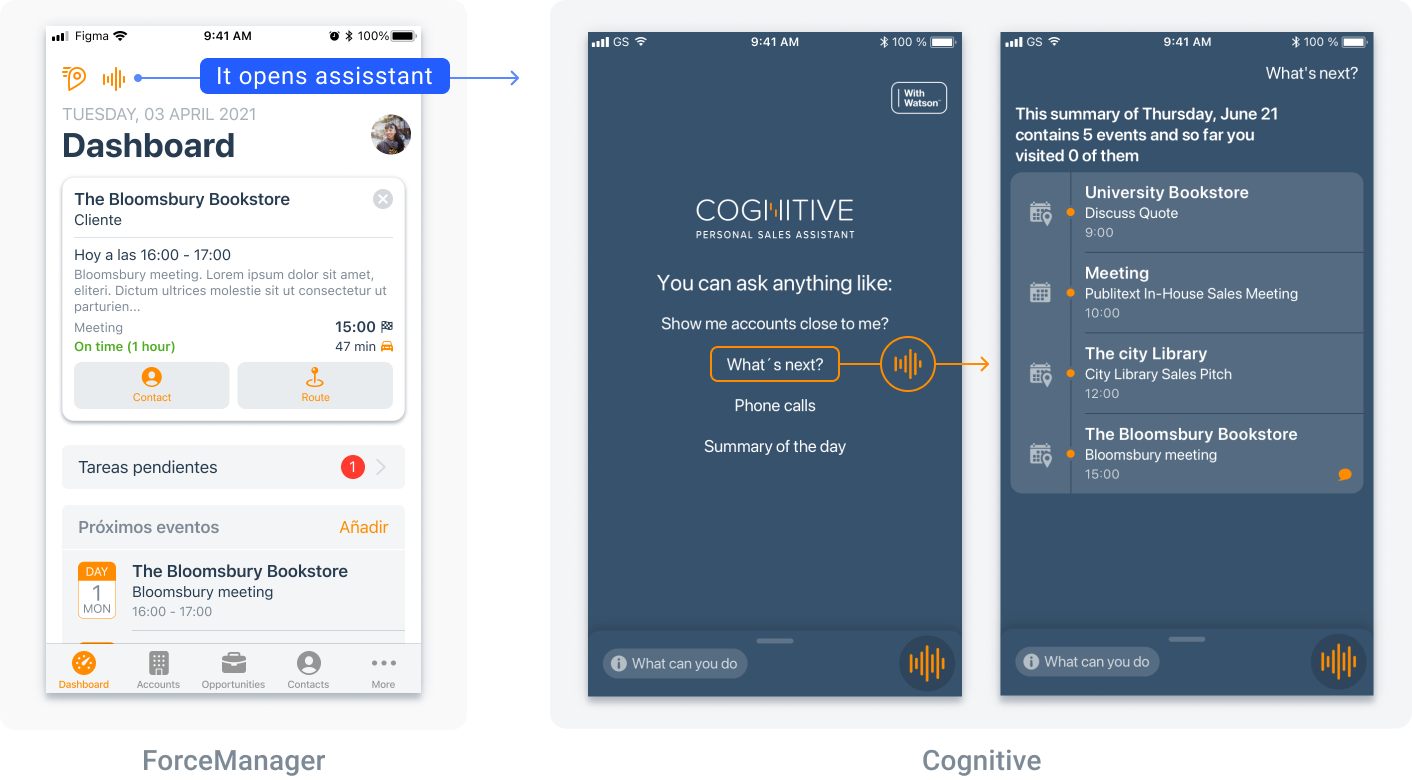

Cognitive is a personal-assistant add-on inside ForceManager CRM that simplifies the sales experience through AI and voice interaction. It currently works with the FM app, and its main feature is letting users upload data and request information by voice, using IBM Watson® speech-recognition and AI technology.

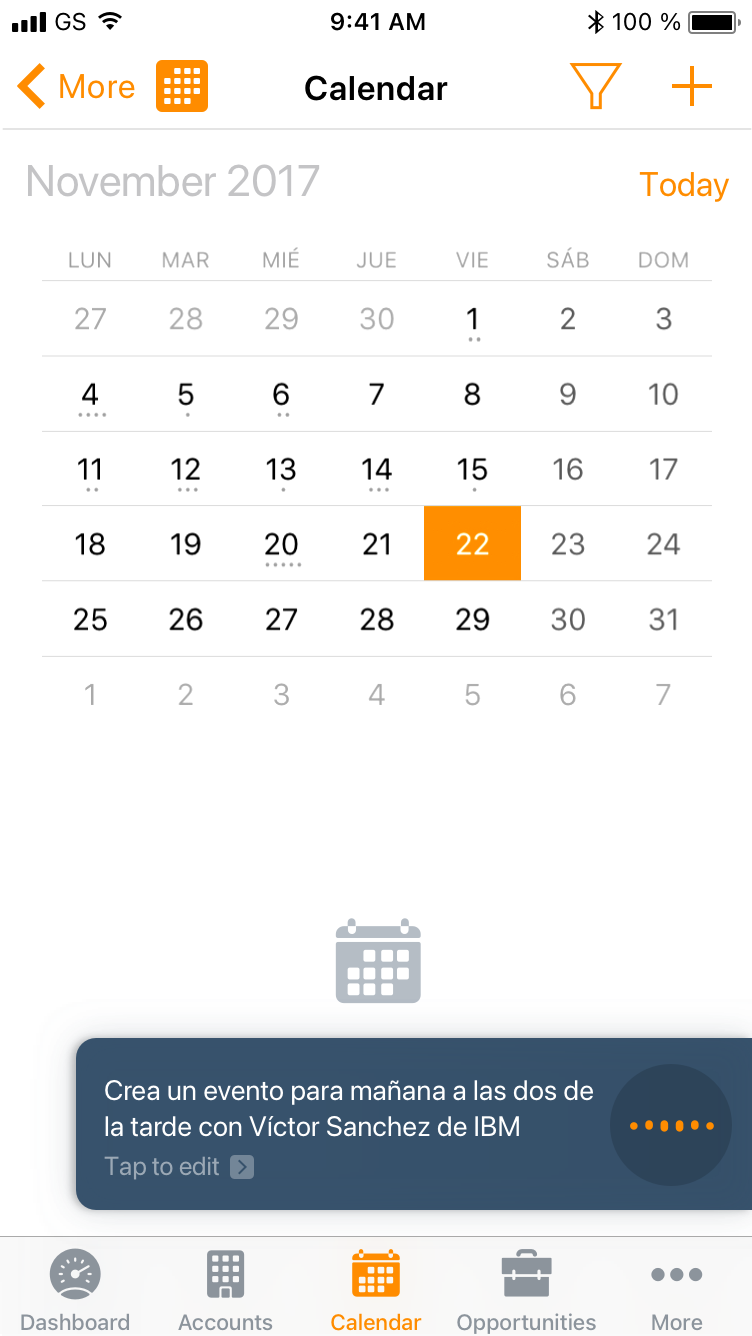

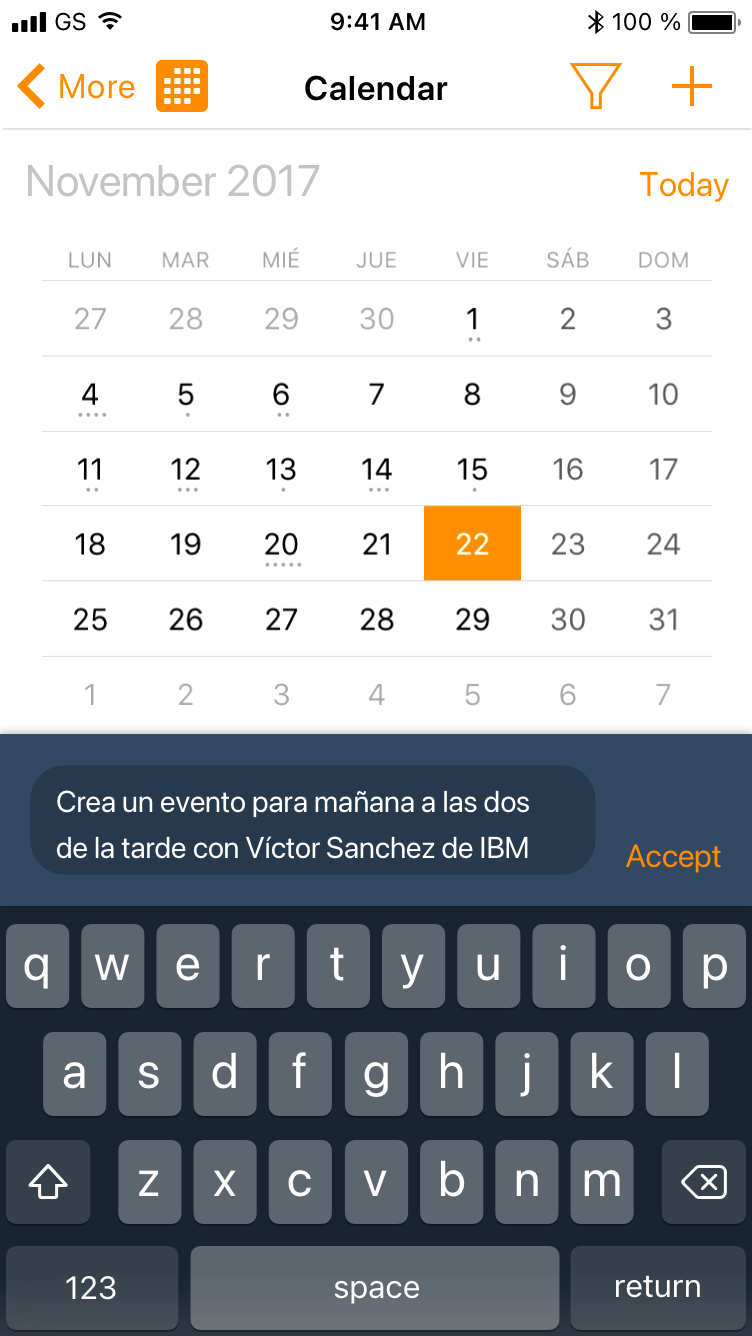

Many salespeople are reluctant to get used to voice interfaces and end up typing their data with the keyboard. The challenge was to find a shortcut for creating events or reminders that reduces the friction of switching between the keyboard and the different steps of the creation flow.

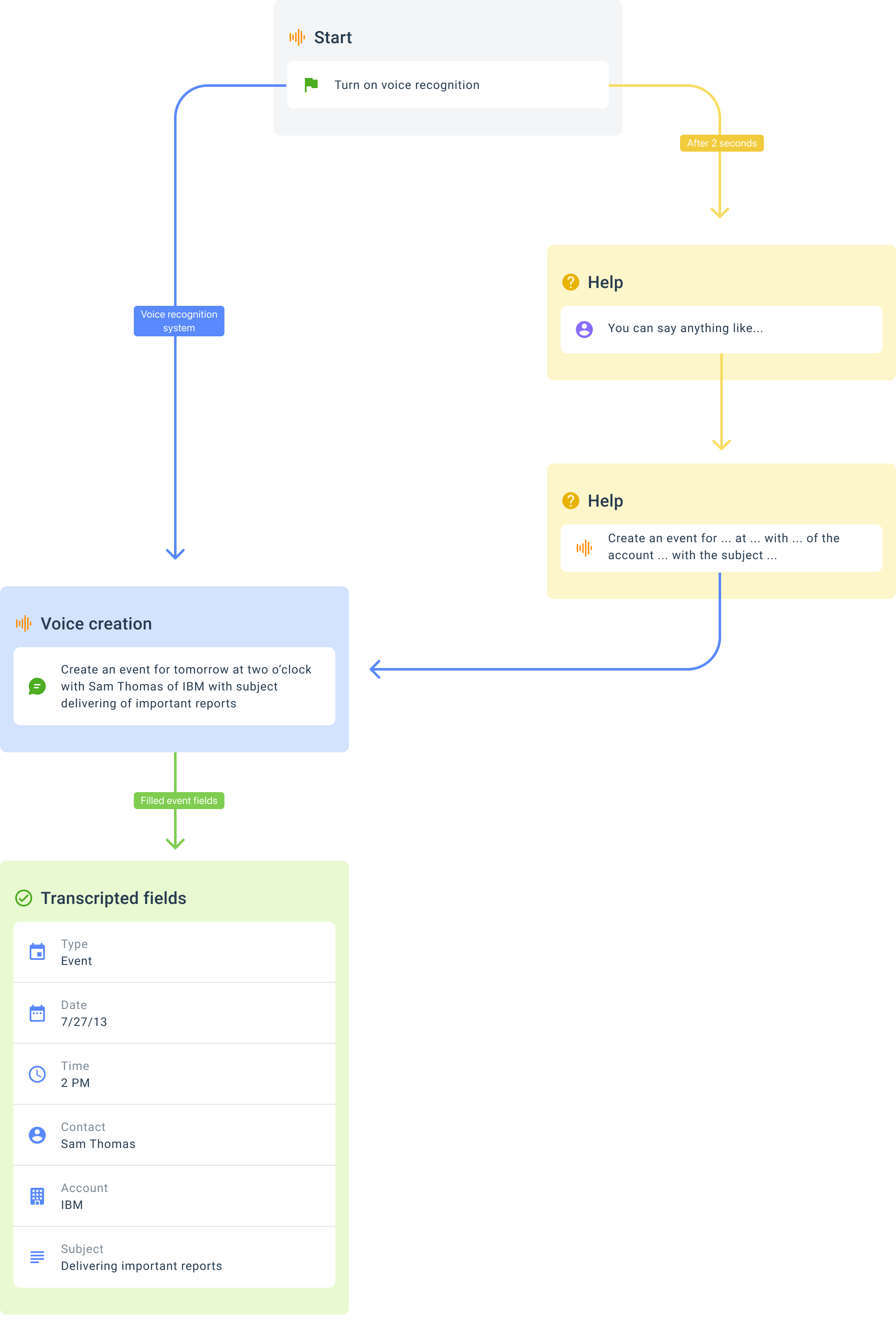

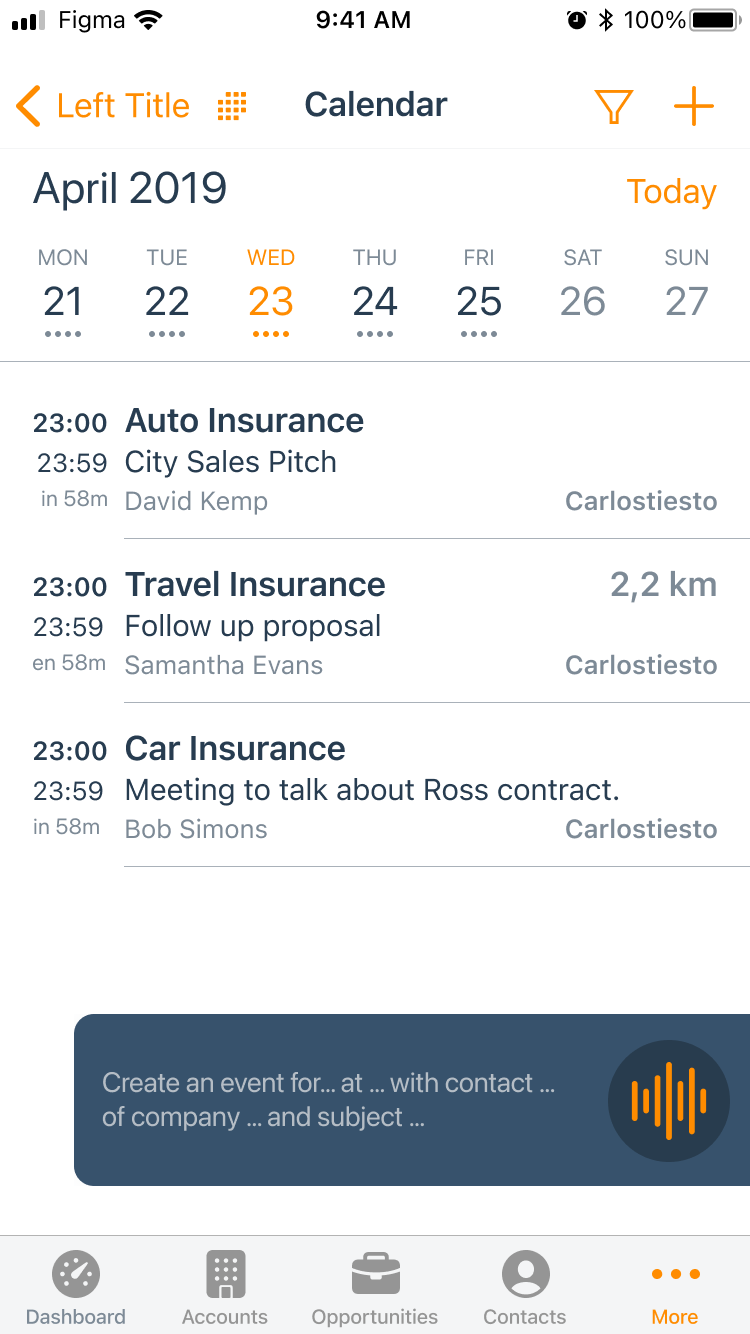

The goal was to integrate into the FM app a quick action for creating events or reminders by voice, powered by Cognitive. We needed to teach users that if they say a field name followed by its value, the system can match the two and generate field/value pairs to fill out the form.
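The field-name-then-value convention can be illustrated with a minimal sketch. This is not how IBM Watson processes speech (the case study doesn't describe the production internals); it is a plain keyword split over an already transcribed utterance, and the field names in `FIELD_NAMES` and the `extract_fields` helper are hypothetical.

```python
import re

# Hypothetical field names for an event form; the real FM app fields may differ.
FIELD_NAMES = ("title", "date", "time", "account", "contact")


def extract_fields(utterance: str) -> dict[str, str]:
    """Split a transcribed utterance into field/value pairs, assuming the
    user says each field name immediately before its value."""
    # Match any known field name as a whole word, case-insensitively.
    pattern = re.compile(r"\b(" + "|".join(FIELD_NAMES) + r")\b", re.IGNORECASE)
    matches = list(pattern.finditer(utterance))
    fields: dict[str, str] = {}
    for i, match in enumerate(matches):
        # A value runs from the end of this field name to the start of the next one.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(utterance)
        value = utterance[match.end():end].strip(" ,.")
        if value:
            fields[match.group(1).lower()] = value
    return fields


print(extract_fields("Title quarterly review, date tomorrow, time 9am, account IBM"))
# {'title': 'quarterly review', 'date': 'tomorrow', 'time': '9am', 'account': 'IBM'}
```

A keyword split like this breaks as soon as a value contains one of the field names, which is one reason the research below also looked at utterances that don't follow the expected wording.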

Cognitive was built on IBM Watson technology, and the initial steps were already implemented and working. The conversational system had already been debugged and finished, so we began our research by focusing on users' habits and frustrations when working with voice assistants.

The first thing I needed to analyze was the flow users follow to fill in fields, in order to understand it and find ways to improve on it in the new flow I was designing.

As we can see, the number of taps users need to fill in each field leads to an unpredictable amount of back-and-forth between the keyboard and different screens.

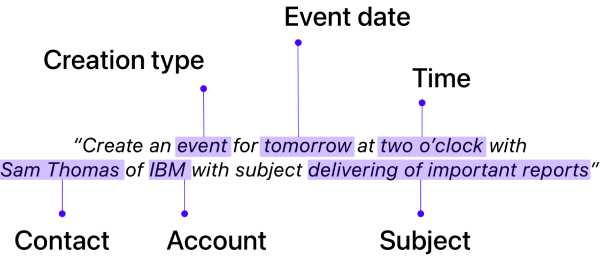

After testing with several users how they most commonly interact with voice assistants, we found that people tend to simplify what they say to make sure their message is understood.

This showed us that messages naturally tend to follow a fairly logical structure.

Another focus of the research was working through different scenarios:

- Expected scenarios

Cases that don't follow the expected wording but are likely to happen. For example, users may leave out field names entirely and say "...tomorrow at 9am..." or "...this afternoon at 4...". In those cases, the identifier for the "Time" field will be "AM/PM" or words such as "morning", "afternoon", or "evening".

- Likely scenarios

It's also quite likely that users will say a contact's name before the account it belongs to, so the assistant needs to keep the contact name in the background until it knows the account name, and only then start searching for the contact. For example: "Create an event with Víctor Sánchez at 9am tomorrow for the IBM account..."

- Error management

Errors fall within the range of probable and foreseeable scenarios, so we deliberately triggered a series of transcription errors to see how users would react.

After reviewing these errors, we noticed that, as a general rule, users tend to freeze and restart the transcription, which causes some frustration because they don't know which parts were transcribed correctly and which were not.

To give users a sense of control, we chose to include an edit button that opens a window where they can edit all of the transcribed content.
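The "Time" cues from the expected scenarios and the deferred contact search from the likely scenarios can be sketched together under a few assumptions. The regular expressions, the `EventDraft` class, and the `CONTACTS` directory below are hypothetical illustrations, not the production logic built on IBM Watson; the contact and account names are assumed to have been extracted already.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical cues for the "Time" field when no field name is spoken:
# an explicit AM/PM time, or a part of the day optionally followed by "at <hour>".
CLOCK_TIME = re.compile(r"\b\d{1,2}(?::\d{2})?\s?(?:am|pm)\b", re.IGNORECASE)
DAY_PART = re.compile(r"\b(?:morning|afternoon|evening)(?:\s+at\s+\d{1,2})?\b", re.IGNORECASE)


def find_time(utterance: str) -> Optional[str]:
    """Return the first time expression found, preferring AM/PM times, or None."""
    for pattern in (CLOCK_TIME, DAY_PART):
        match = pattern.search(utterance)
        if match:
            return match.group(0)
    return None


@dataclass
class EventDraft:
    """Event slots filled in whatever order the user says them (hypothetical model)."""
    time: Optional[str] = None
    account: Optional[str] = None
    contact_name: Optional[str] = None  # kept in the background until the account is known
    contact_id: Optional[str] = None

    def hear_contact(self, name: str, directory: dict) -> None:
        self.contact_name = name
        self._resolve_contact(directory)

    def hear_account(self, account: str, directory: dict) -> None:
        self.account = account
        self._resolve_contact(directory)

    def _resolve_contact(self, directory: dict) -> None:
        # Only start the contact search once both the name and its account are known.
        if self.contact_name and self.account and self.contact_id is None:
            self.contact_id = directory.get((self.account, self.contact_name))


# Hypothetical contact directory keyed by (account, contact name).
CONTACTS = {("IBM", "Víctor Sánchez"): "contact-042"}

draft = EventDraft()
utterance = "Create an event with Víctor Sánchez at 9am tomorrow for the IBM account"
draft.time = find_time(utterance)               # no "Time" field name, but "9am" is a cue
draft.hear_contact("Víctor Sánchez", CONTACTS)  # account still unknown: name is only stored
draft.hear_account("IBM", CONTACTS)             # account now known: the lookup runs
print(draft.time, draft.contact_id)             # 9am contact-042
```

Deferring the lookup keeps the user's word order free: the contact search simply waits until both pieces of information are available, instead of failing or asking for the account first.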

In this phase, the goal was to review our hypotheses and possibly rethink some key factors: Is it easy enough for users to understand and learn? Are we getting the expected results? Is the feature easy to identify?

We finished around mid-2019, and the feature had a high impact on helping salespeople schedule events and tasks. It is still in use today, and the feedback we have received has been quite positive. Later versions tried to introduce more conditions, but research showed that adding complexity to the interaction made the feature lose its defining trait: being a quick action.

- Conceptualize new product initiatives

- Collect quantitative and qualitative data to support design decisions

- Develop and maintain the design system

🤘